Federated Learning at the Resource-Constrained Edge

Federated learning of large models faces major challenges at the edge. Edge users typically lack sufficient computation power, which makes training large models difficult. In this project, we develop a new methodology that trains large models at the resource-constrained edge via sub-model training.

Overview

At a high level, we decompose a large model into small sub-models and let each edge user train one sub-model. The server then aggregates all sub-models to reconstruct the full model.

However, unlike other sub-model training methods, we create sub-models so that

- every sub-model is an approximation of the full model
- each sub-model covers a different part of the full model

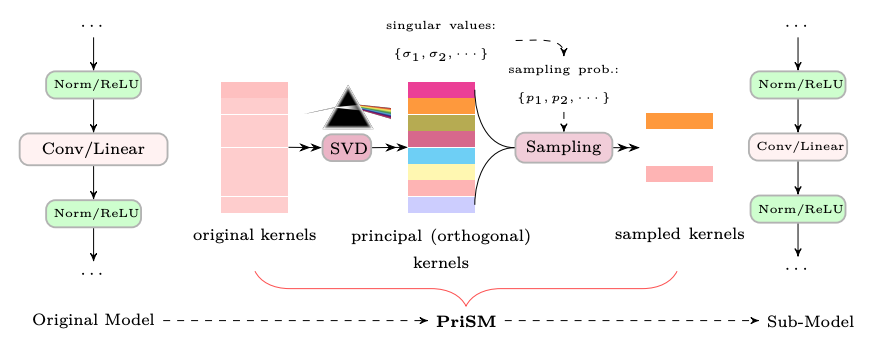

Sub-Model Creation

We first use singular value decomposition (SVD) to convert the kernels in a neural network layer into principal kernels, where the singular values indicate the importance of each principal kernel. We then adopt a novel sampling process that samples kernels based on their singular values, so that principal kernels with larger singular values have a higher probability of being sampled.

In this way, we ensure that 1) every sub-model approximates the full model, and 2) different sub-models are created from different kernels.
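The sub-model creation step can be sketched in NumPy as follows. This is a minimal illustration, not the project's implementation: the function names `principal_kernels` and `sample_sub_model` are hypothetical, the layer weight is assumed to be flattened to a 2-D matrix before the SVD, and the sampling probability is assumed to be directly proportional to the singular value (the exact distribution used in the paper may differ).

```python
import numpy as np

def principal_kernels(weight):
    """Decompose a conv weight (out_ch, in_ch, kh, kw) via SVD.

    Assumption: the weight is flattened to (out_ch, in_ch * kh * kw);
    each rank-1 component is treated as one principal kernel.
    """
    mat = weight.reshape(weight.shape[0], -1)
    u, s, vt = np.linalg.svd(mat, full_matrices=False)
    return u, s, vt

def sample_sub_model(u, s, vt, num_keep, rng):
    """Sample principal kernels without replacement.

    Assumption: selection probability is proportional to the singular
    value, so important kernels are picked more often.
    """
    probs = s / s.sum()
    idx = np.sort(rng.choice(len(s), size=num_keep, replace=False, p=probs))
    return idx, u[:, idx], s[idx], vt[idx, :]

rng = np.random.default_rng(0)
weight = rng.standard_normal((16, 8, 3, 3))  # toy layer: 16 kernels
u, s, vt = principal_kernels(weight)
idx, u_sub, s_sub, vt_sub = sample_sub_model(u, s, vt, num_keep=4, rng=rng)

# Low-rank approximation of the flattened layer built from the sub-model.
approx = (u_sub * s_sub) @ vt_sub
```

Because every client draws its own indices, two clients usually receive different sets of principal kernels, while kernels with large singular values tend to appear in many sub-models.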

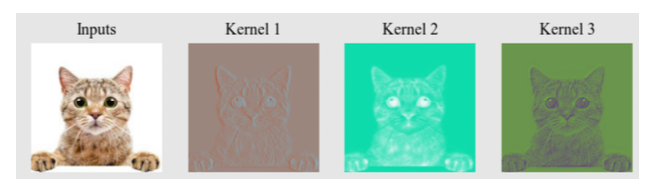

Reasoning

Each principal kernel captures distinct features of the input. Therefore, by distributing the principal kernels to different clients, we allow different clients to learn different feature extractors. The server then aggregates these feature extractors to obtain the final learned model.
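As a rough illustration of the aggregation idea, the sketch below rebuilds a layer from the principal kernels returned by several clients. The aggregation rule is an assumption made for this example (average each principal kernel over the clients that hold it, then sum the rank-1 components); the function name `aggregate` and the fixed index sets are hypothetical, and the project's actual rule may differ.

```python
import numpy as np

def aggregate(client_updates, rank, shape):
    """Rebuild a layer matrix from per-client principal kernels.

    client_updates: list of (idx, u_sub, s_sub, vt_sub) tuples.
    Assumed rule: average each rank-1 component over the clients that
    hold it, then sum all components.
    """
    total = np.zeros((rank,) + shape)
    counts = np.zeros(rank)
    for idx, u_sub, s_sub, vt_sub in client_updates:
        for j, k in enumerate(idx):
            total[k] += s_sub[j] * np.outer(u_sub[:, j], vt_sub[j])
            counts[k] += 1
    covered = counts > 0
    total[covered] /= counts[covered][:, None, None]
    return total.sum(axis=0)

rng = np.random.default_rng(0)
mat = rng.standard_normal((6, 10))  # toy flattened layer
u, s, vt = np.linalg.svd(mat, full_matrices=False)

# Three clients with overlapping index sets that together cover all kernels.
index_sets = [np.array([0, 1, 2]), np.array([2, 3, 4]), np.array([0, 4, 5])]
updates = [(idx, u[:, idx], s[idx], vt[idx, :]) for idx in index_sets]

full = aggregate(updates, rank=len(s), shape=mat.shape)
```

In this toy setup the clients return their factors unchanged, so the aggregate reproduces the original matrix; in real training each client would first update its factors on local data.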

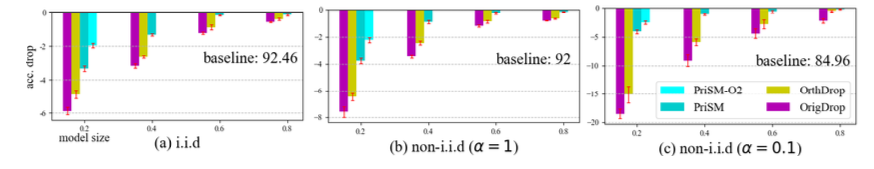

Result Highlights

We show on multiple models and datasets that our proposed method achieves accuracy comparable to full-model training while requiring edge users to train only a small sub-model.

Model accuracy on CIFAR-10 with ResNet-18

Cite

@article{TMLR2023PriSM,
  title={Overcoming resource constraints in federated learning: Large models can be trained with only weak clients},
  author={Niu, Yue and Prakash, Saurav and Kundu, Souvik and Lee, Sunwoo and Avestimehr, Salman},
  journal={Transactions on Machine Learning Research},
  year={2023}
}