Private Learning with Asymmetric Flows

Training and inference costs have always been a central problem when deploying machine learning (ML) systems. The problem becomes even more pressing when ML must also protect privacy.

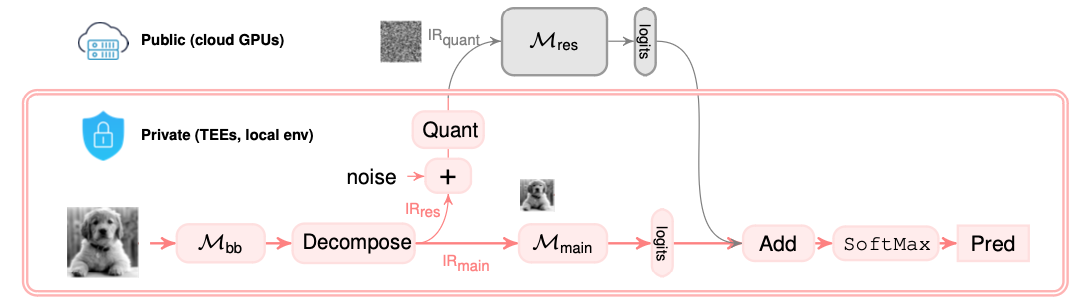

In this project, we explore a new private learning setting with two platforms: a public platform (e.g., cloud GPUs) and a private platform (e.g., a local environment). We aim to develop a learning and inference framework that trains efficiently while still maintaining strong privacy protection.

Overview

We first apply two asymmetric data decompositions, singular value decomposition (SVD) and the discrete cosine transform (DCT), to split the input into a low-dimensional main representation and a residual part.

We then design two separate models: one learns the low-dimensional representation and the other learns the residual part. The model that learns the low-dimensional representation runs in the private environment, while the model that learns the residuals runs in the public environment. Because the private environment only handles the compact representation, this split achieves both efficiency and privacy.

Asymmetric Data Decomposition

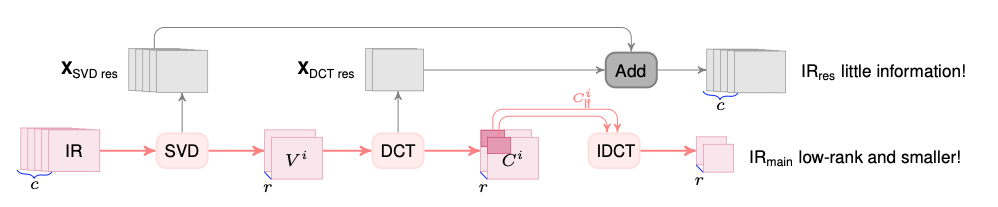

We first use SVD to extract principal channels. Because the channels are highly correlated, a few principal channels suffice to approximate the original data.

We then use DCT to reduce the feature size of each channel. DCT is very effective at exploiting spatial correlation.

We offload the rest of the data to the residual part.
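The decomposition steps above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the project's implementation: the function names (`dct_matrix`, `asymmetric_decompose`), the `(C, H, W)` tensor layout, and the hyperparameters `rank` (number of principal channels kept) and `freq` (side length of the low-frequency DCT block kept) are all hypothetical choices made for this example.

```python
import numpy as np

def dct_matrix(n):
    """Return the orthonormal DCT-II matrix of size n x n."""
    k = np.arange(n)[:, None]   # frequency index
    i = np.arange(n)[None, :]   # sample index
    m = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    m[0, :] = np.sqrt(1.0 / n)  # DC row has its own normalization
    return m

def asymmetric_decompose(x, rank, freq):
    """Split x of shape (C, H, W) into a compact main part and a residual.

    rank and freq are assumed hyperparameters (not taken from the source).
    Returns (low, mix, residual, approx) with x == approx + residual.
    """
    c, h, w = x.shape
    flat = x.reshape(c, h * w)

    # Step 1: SVD across channels. Rows of vt are the principal channels.
    u, s, vt = np.linalg.svd(flat, full_matrices=False)
    mix = u[:, :rank] * s[:rank]               # (C, rank) mixing weights
    principal = vt[:rank].reshape(rank, h, w)  # (rank, H, W)

    # Step 2: 2-D DCT per principal channel; keep the low-frequency block.
    dh, dw = dct_matrix(h), dct_matrix(w)
    coeffs = dh @ principal @ dw.T             # matmul broadcasts over rank
    low = coeffs[:, :freq, :freq]              # compact main representation

    # Step 3: everything the main part does not capture is the residual.
    padded = np.zeros_like(coeffs)
    padded[:, :freq, :freq] = low
    approx_principal = dh.T @ padded @ dw      # inverse of the orthonormal DCT
    approx = (mix @ approx_principal.reshape(rank, h * w)).reshape(c, h, w)
    residual = x - approx
    return low, mix, residual, approx

# Usage on synthetic data: 16 channels that are noisy mixtures of 2 smooth maps.
rng = np.random.default_rng(0)
grid = np.linspace(0.0, 1.0, 8)
base = np.stack([np.outer(grid, grid), np.outer(1.0 - grid, grid)])
x = np.einsum("cr,rhw->chw", rng.normal(size=(16, 2)), base)
x = x + 0.01 * rng.normal(size=x.shape)
low, mix, residual, approx = asymmetric_decompose(x, rank=2, freq=4)
```

In this sketch the main representation `low` holds `rank * freq * freq` values, while the residual keeps the full input size, which is the asymmetry the section describes.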

Low-Dimensional Model Design

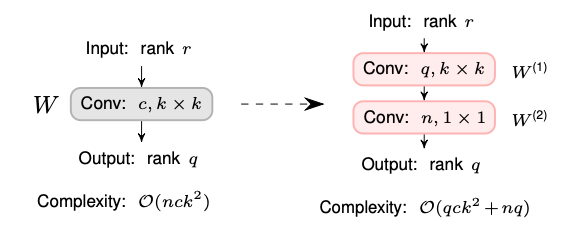

We show theoretically that, given low-rank and low-dimensional input data, a very small model suffices to learn the input effectively and reach high model performance.

Model Accuracy

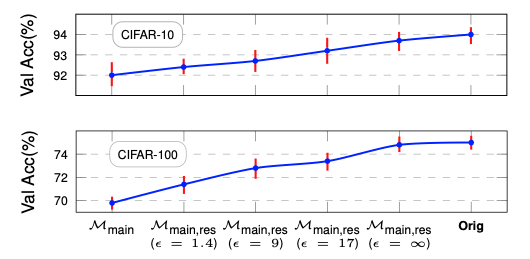

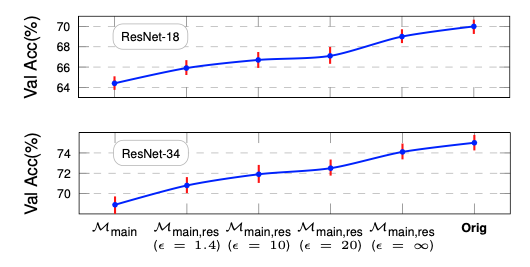

We show that with small model learning low-dimensional representation, we can still preserve the model accuracy with strong privacy protection.

Model accuracy on CIFAR-10/100 dataset

Model accuracy on ImageNet dataset

Attack Performance

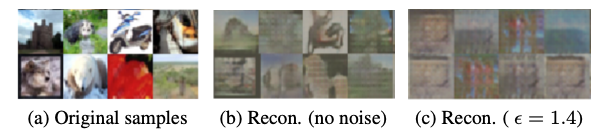

We also investigate whether an attacker can reconstruct the raw data from the public residuals. We show that the noise added to the residuals for differential privacy (DP) effectively protects the raw data.
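To make the noise step concrete, here is a minimal sketch of perturbing a residual before it leaves the private environment. It assumes a Gaussian-mechanism style recipe (clip the L2 norm, then add Gaussian noise scaled to the clipping bound); the source does not specify the mechanism, so the function name `perturb_residual` and the parameters `clip_norm` and `noise_multiplier` are hypothetical, and the sketch makes no claim about the resulting privacy budget.

```python
import numpy as np

def perturb_residual(residual, clip_norm, noise_multiplier, rng=None):
    """Clip the residual's L2 norm and add Gaussian noise (assumed recipe)."""
    rng = np.random.default_rng() if rng is None else rng
    # Bound the residual's L2 norm so the noise scale is meaningful.
    norm = np.linalg.norm(residual)
    scale = min(1.0, clip_norm / (norm + 1e-12))
    clipped = residual * scale
    # Noise standard deviation is proportional to the clipping bound.
    noise = rng.normal(0.0, noise_multiplier * clip_norm, size=residual.shape)
    return clipped + noise

# Usage: only the noisy residual would be sent to the public platform.
rng = np.random.default_rng(0)
residual = rng.normal(size=(16, 8, 8))
noisy = perturb_residual(residual, clip_norm=1.0, noise_multiplier=0.5, rng=rng)
```

A larger `noise_multiplier` makes reconstruction from the residuals harder at the cost of a less informative signal for the public model.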

Citation

@misc{CVPR2024Delta,
  title={All Rivers Run to the Sea: Private Learning with Asymmetric Flows},
  author={Yue Niu and Ramy E. Ali and Saurav Prakash and Salman Avestimehr},
  year={2024},
  eprint={2312.05264},
  archivePrefix={arXiv},
  primaryClass={cs.CR}
}

@article{PETS2022AsymML,
  title={3LegRace: Privacy-Preserving DNN Training over TEEs and GPUs},
  author={Niu, Yue and Ali, Ramy E and Avestimehr, Salman},
  journal={Proceedings on Privacy Enhancing Technologies},
  volume={4},
  pages={183--203},
  year={2022}
}